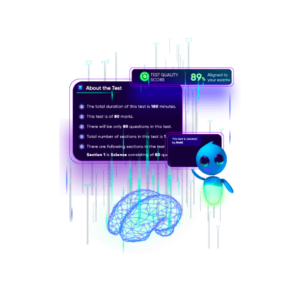

Auto Generation Of Tests

Get ready to ace your exams with our cutting-edge Auto Test Generation tool. Whether you are preparing for competitive exams or school tests, our platform lets you quickly generate personalised, highly customised tests. Our Test Quality Score algorithm checks that each test contains high-quality questions that accurately assess your knowledge. With the Create Your Own Test feature, you can select chapters and difficulty levels for a specific goal and exam to create a test tailored to your needs.

Auto Test Generation aims to let institutes and students generate high-quality, personalised tests automatically, saving teachers' precious time and avoiding individual bias.

Most students lack access to the best resources because of cost and demographics. A teacher's time is also scarce. With Auto Test Generation, we want to help teachers spend more time teaching and less time preparing papers. Developing a high-quality test paper manually is a challenging process.

To address this problem, we have developed an intelligent system which considers multiple parameters to design test papers:

- Syllabus Coverage

- Topic Weightage

- Difficulty Levels

- Previous Year Trends

- Different Types of Questions

- Concept Mastery of the User (Personalisation)

- Behavioural Profile of the User (Personalisation)

This utility helps teachers generate highly customised test papers quickly. At the same time, it ensures high quality, which is checked using the Test Quality Score algorithm developed by Embibe.

Test Quality Score and Question Discrimination Factor

The Test Quality Score checks whether a generated test meets the standards that the teacher or institute selected for difficulty and chapter coverage.

The discrimination factor of each question is estimated with Item Response Theory from user-question attempt data. A question with high discrimination separates stronger students from weaker ones more sharply. Such questions are given priority during question selection, which improves the overall quality of the test.
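To make the idea of discrimination concrete, the sketch below shows the two-parameter logistic (2PL) item response function, in which the discrimination parameter controls how steeply the probability of a correct answer rises with ability. Fitting a full IRT model is beyond a short example, so the ranking step swaps in a simpler classical proxy, the point-biserial correlation between a question and the score on the remaining questions. This is an illustration under those assumptions, not the estimation procedure used in the product, and all function names are hypothetical.

```python
import math
from statistics import mean, pstdev


def p_correct_2pl(ability, discrimination, difficulty):
    """2PL item response function: probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-discrimination * (ability - difficulty)))


def point_biserial(item_scores, rest_scores):
    """Pearson correlation between a 0/1 item column and the rest score."""
    sx, sy = pstdev(item_scores), pstdev(rest_scores)
    if sx == 0 or sy == 0:
        return 0.0  # no variation, so the item cannot discriminate
    mx, my = mean(item_scores), mean(rest_scores)
    cov = mean([(x - mx) * (y - my) for x, y in zip(item_scores, rest_scores)])
    return cov / (sx * sy)


def rank_by_discrimination(responses):
    """Return question indices, most discriminating first.

    responses[s][q] is 1 if student s answered question q correctly, else 0.
    """
    n_questions = len(responses[0])
    totals = [sum(row) for row in responses]
    scores = []
    for q in range(n_questions):
        item = [row[q] for row in responses]
        # Exclude the item itself so it does not inflate its own correlation.
        rest = [total - x for total, x in zip(totals, item)]
        scores.append(point_biserial(item, rest))
    return sorted(range(n_questions), key=lambda q: scores[q], reverse=True)
```

A question that weaker students answer correctly more often than stronger ones gets a negative correlation and falls to the bottom of the ranking, which is the kind of question a selection step would avoid.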

Create Your Own Test

We have developed the Create Your Own Test feature, which lets a student create a test for a specific goal and exam, customised by choosing chapters and difficulty levels. The test is generated using optimisation algorithms that satisfy the constraints selected by the student, and it is personalised to that student.
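The specific optimisation algorithms are not described here. As a rough illustration of constraint-driven selection, the sketch below uses a simple greedy heuristic: it restricts the question bank to the chosen chapters and fills a per-difficulty quota, taking the most discriminating questions first. The `build_test` function, the question schema and the quota format are hypothetical, and the personalisation step is left out.

```python
def build_test(bank, chapters, quota):
    """Greedily pick questions from the chosen chapters to fill each quota.

    bank: list of dicts with 'id', 'chapter', 'difficulty' and
          'discrimination' keys (assumed schema).
    chapters: set of chapters selected by the student.
    quota: questions wanted per difficulty level, e.g. {'easy': 2, 'hard': 1}.
    Returns the chosen question ids and any quota that could not be filled.
    """
    remaining = dict(quota)
    chosen = []
    # Visit the most discriminating questions first.
    for question in sorted(bank, key=lambda q: q["discrimination"], reverse=True):
        if question["chapter"] not in chapters:
            continue  # outside the student's selected chapters
        level = question["difficulty"]
        if remaining.get(level, 0) > 0:
            chosen.append(question["id"])
            remaining[level] -= 1
    unmet = {level: n for level, n in remaining.items() if n > 0}
    return chosen, unmet


bank = [
    {"id": "q1", "chapter": "Optics", "difficulty": "easy", "discrimination": 0.9},
    {"id": "q2", "chapter": "Optics", "difficulty": "easy", "discrimination": 0.4},
    {"id": "q3", "chapter": "Waves", "difficulty": "hard", "discrimination": 0.7},
    {"id": "q4", "chapter": "Heat", "difficulty": "hard", "discrimination": 0.95},
    {"id": "q5", "chapter": "Waves", "difficulty": "easy", "discrimination": 0.6},
]
chosen, unmet = build_test(bank, {"Optics", "Waves"}, {"easy": 2, "hard": 1})
print(chosen, unmet)  # ['q1', 'q3', 'q5'] {}
```

Returning the unmet quota lets the caller see when the selected chapters do not hold enough questions at a given difficulty, rather than silently producing a shorter test. A greedy pass is easy to follow but cannot trade off competing constraints such as topic weightage, which is where a proper optimisation method would be needed.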

Diagnostic Test

A good diagnostic test is one that can (i) discriminate between students of different abilities for a given skill set, (ii) be consistent with ground truth data and (iii) achieve this with as few assessment questions as possible. We create diagnostic tests as part of the Personalised Achievement Journey to diagnose how well a student has mastered the concepts essential to achieving the goal. This is done using two tests:

- Prerequisite Test: Gives questions corresponding to the fundamental prerequisite concepts required to achieve a goal selected by the student.

- Achieve Test: Gives questions covering the most important concepts for the specified exam and goal.
