Online evaluations that assess a student’s understanding of concepts require each question in the evaluation system to be tagged with concepts and other metadata, such as difficulty level, time needed to solve, and skills. This metadata can be used to identify the concepts a student is weak in, or her level of understanding of that content. Typically, metadata tagging is performed manually by expert faculty. However, this is prohibitively expensive when a large dataset of questions needs to be tagged. Furthermore, manual tagging tends to introduce human bias, because multiple annotators work on different subsets of the dataset.

Embibe has developed machine learning approaches that leverage in-house manually annotated datasets and publicly available, free-to-use data sources to tag questions with metadata. In this article, we look at Embibe’s Smart Tagging system for concept tagging. The system uses NLP/NLU to understand textual content, deep learning to extract meaning from images, and supervised and unsupervised ML algorithms to assign each question a ranked list of the concepts with the highest probability of being relevant to it.
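To make the idea of a "ranked list of concepts" concrete, here is a minimal sketch of the final ranking step only. It assumes some upstream model has already produced a raw relevance score per candidate concept; the function name `rank_concepts`, the use of a softmax, and the example scores are all hypothetical illustrations, not Embibe’s actual implementation.

```python
import math


def rank_concepts(scores, k=5):
    """Return the k concepts with the highest probability, best first.

    `scores` maps concept name -> raw relevance score (hypothetical input;
    how such scores are produced is outside the scope of this sketch).
    """
    if not scores:
        return []
    # Softmax turns raw scores into probabilities that sum to 1.
    # Subtracting the maximum score keeps exp() numerically stable.
    max_score = max(scores.values())
    exps = {concept: math.exp(s - max_score) for concept, s in scores.items()}
    total = sum(exps.values())
    probs = {concept: e / total for concept, e in exps.items()}
    # Sort by probability, highest first, and keep the top k.
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


# Made-up scores for a single physics question.
example_scores = {
    "Newton's laws of motion": 2.1,
    "Friction": 1.4,
    "Work and energy": 0.3,
    "Circular motion": -0.5,
}

ranked = rank_concepts(example_scores, k=3)
for concept, prob in ranked:
    print(f"{concept}: {prob:.3f}")
```

The first entry of the returned list plays the role of the "most relevant concept", and the first five entries would correspond to the top five relevant concepts discussed in the results.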

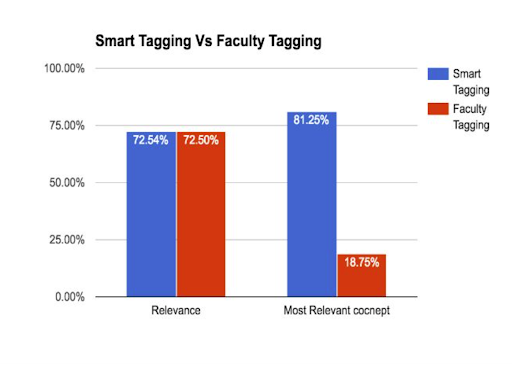

Figure 1: Results of Smart Tagging System Showing the Relevance of Concepts Tagged as well as Most Relevant Concept Tagged

The figure above shows the results of Embibe’s Smart Tagging System for concepts on a randomly chosen set of thousands of questions. We compare the results of Embibe’s Smart Tagging System with those of crowd-sourced faculty. The ground truth dataset for these tests was created using majority voting among three independent expert faculty members. The plot on the left in Figure 1 shows that Smart Tagging and crowd-sourced faculty perform about equally well at assigning relevant concepts (the top five most relevant concepts) to a question. The plot on the right in Figure 1 is more interesting. It shows that the most pertinent concept for a question, when concepts are ordered by relevance score, is four times more likely to be assigned by Smart Tagging than by crowd-sourced faculty.
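The evaluation described above can be sketched roughly as follows. This is an illustration under simplifying assumptions, not the procedure actually used: each of the three experts is assumed to name a single most relevant concept per question, questions without a majority are skipped, and the helper names (`majority_vote`, `hit_rate_at_k`) and sample data are hypothetical.

```python
from collections import Counter


def majority_vote(labels):
    """Return the label chosen by more than half of the annotators, else None."""
    label, count = Counter(labels).most_common(1)[0]
    return label if count > len(labels) / 2 else None


def hit_rate_at_k(predictions, annotations, k):
    """Fraction of questions whose ground-truth concept is in the top k predictions.

    `predictions` maps question id -> concepts ranked by relevance, best first.
    `annotations` maps question id -> labels from the independent experts.
    """
    hits = 0
    total = 0
    for qid, ranked in predictions.items():
        truth = majority_vote(annotations[qid])
        if truth is None:
            continue  # no majority among the experts, so no ground truth
        total += 1
        hits += truth in ranked[:k]
    return hits / total if total else 0.0


# Made-up data: three expert labels and one ranked prediction list per question.
annotations = {
    "q1": ["Newton's laws", "Newton's laws", "Friction"],
    "q2": ["Ohm's law", "Kirchhoff's laws", "Ohm's law"],
    "q3": ["Optics", "Waves", "Sound"],  # no majority, so q3 is skipped
}
predictions = {
    "q1": ["Friction", "Newton's laws", "Work"],
    "q2": ["Ohm's law", "Resistance", "Kirchhoff's laws"],
    "q3": ["Waves", "Optics", "Sound"],
}

top1 = hit_rate_at_k(predictions, annotations, k=1)
top3 = hit_rate_at_k(predictions, annotations, k=3)
print(f"top-1 hit rate: {top1:.2f}, top-3 hit rate: {top3:.2f}")
```

Running the same function over a tagger’s predictions and over crowd-sourced faculty tags, with `k=5` for the left plot and `k=1` for the right plot, would give the kind of side-by-side comparison that Figure 1 reports.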

Implementation details of the Smart Tagging System are beyond the scope of this article; we will discuss them here as more information becomes available.